Jeff Bartell and Zach Kaiser collaborated on this project.

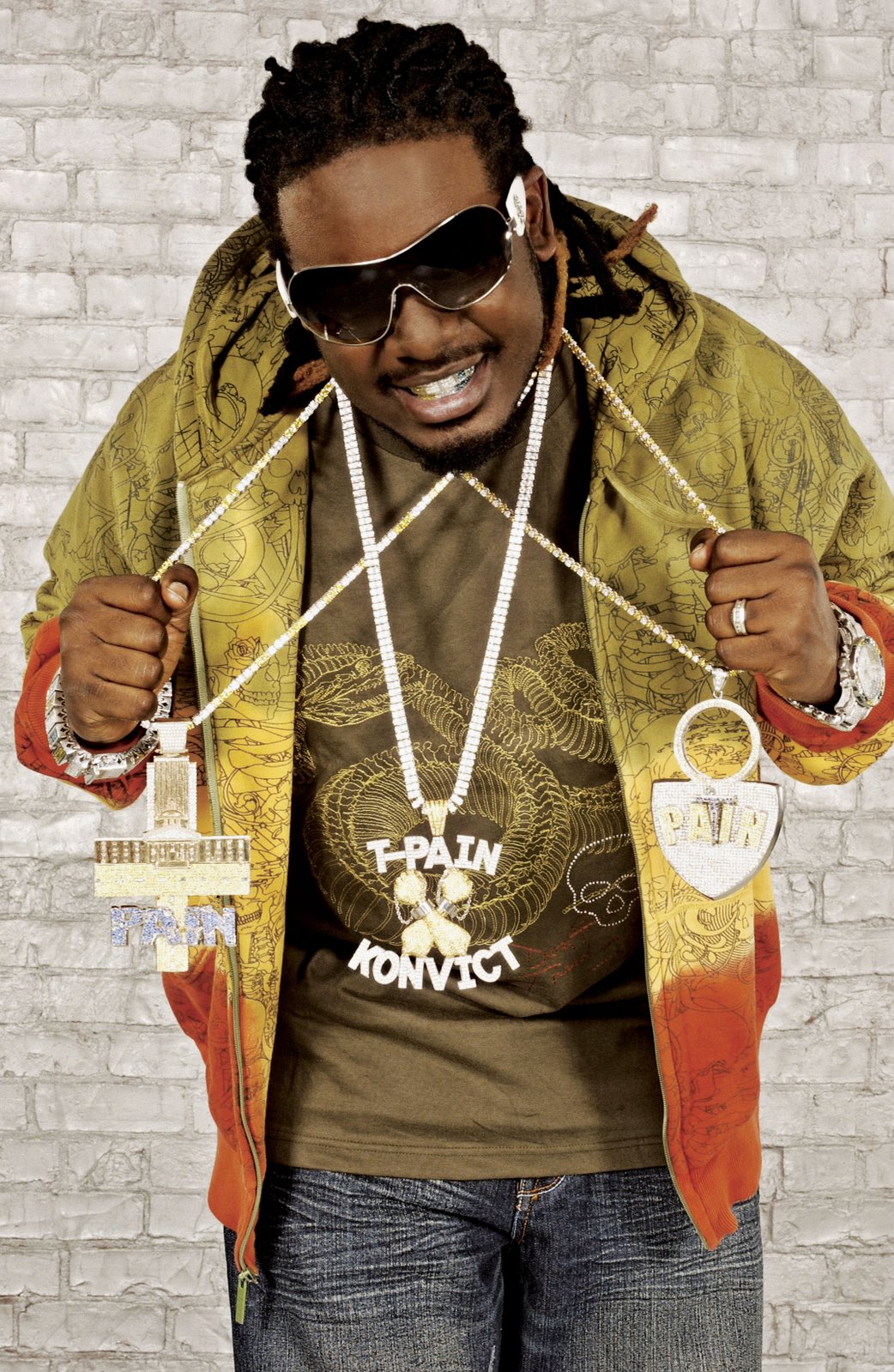

The goal of our project was to research the musical and cultural implications of the recording software plug-in Auto-Tune, a relatively new tool that has taken the musical world by storm over the past 12 years. Our research highlights the qualitatively new phenomena that its ubiquitous use has created in music and Internet culture.

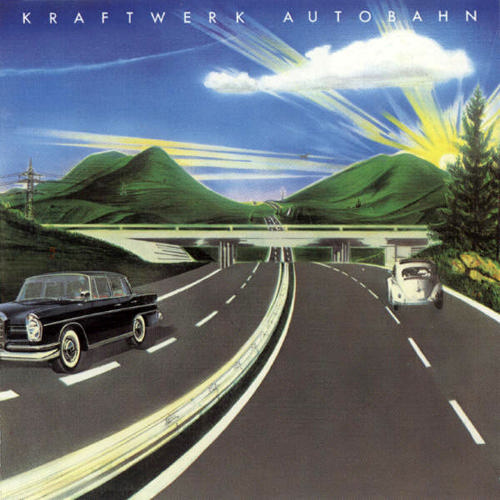

To research Auto-Tune, we found it pertinent to delve into the history of the digitized voice, focusing mainly on the development and eventual worldwide musical use of the “vocoder” in the twentieth century. After early use as a military device that encoded the voice to allow conversations between the United States and England that could not be tapped, the vocoder made its way into mainstream music in the 1970s. It allows a musician to add a robotic sound to their vocals, a sound that has remained a fixture of popular music ever since. The vocoder is considered one of the key technological predecessors to Auto-Tune.

Auto-Tune also allows a musician to add a robotic sound to their vocals, but it does much more. The software’s algorithms (the same that allowed Exxon to detect seismic waves when searching for oil in the ocean in the early 1990s) can detect the pitch a person is singing, analyze it, and modify it. This feature can essentially make someone without natural vocal talent sing on pitch and in tune with a song. Amazingly, this correction can be applied to vocals performed live. What kind of impact does such a phenomenon have on the music industry? What kind of impact does it have on our culture in general? Is the language of music making and performance changing?
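To make the "detect, analyze, modify" idea concrete, here is a minimal sketch of only the last step: given a pitch that has already been detected, find the nearest note of the equal-tempered scale and how far the singer is from it. This is our own illustration and not Auto-Tune's actual algorithm; the function names and the 440 Hz reference tuning are assumptions, and the detection and audio resynthesis stages are left out entirely.

```python
import math

A4_HZ = 440.0  # assumed reference tuning (concert A)


def nearest_semitone_hz(freq_hz: float) -> float:
    """Return the equal-tempered note frequency closest to freq_hz."""
    # Distance from A4 measured in semitones (12 per octave).
    semitones_from_a4 = 12 * math.log2(freq_hz / A4_HZ)
    # Snap to the nearest whole semitone and convert back to Hz.
    return A4_HZ * 2 ** (round(semitones_from_a4) / 12)


def correction_cents(freq_hz: float) -> float:
    """Return the shift (in cents) needed to reach the nearest note."""
    target_hz = nearest_semitone_hz(freq_hz)
    return 1200 * math.log2(target_hz / freq_hz)


if __name__ == "__main__":
    sung_hz = 450.0  # a slightly sharp A4 (hypothetical input)
    print(nearest_semitone_hz(sung_hz), correction_cents(sung_hz))
```

A real pitch corrector would also have to decide how quickly to apply this shift; applying it instantly is commonly cited as the source of the robotic effect, while a slower glide sounds more natural.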

Additionally, one of the modes in the Auto-Tune software allows a user to create musical output from non-musical input. The software contains an interface that allows a user to bring in speech and map specific notes onto that speech. In essence, someone with enough talent can turn instances of human speech (famous speeches, news clips, and even the cry of an infant) into catchy songs to be spread on the Internet. What implications might this new way of communicating have for our society? How might this feature of Auto-Tune change the way we receive information in the future?

When we began the project, we each feared that subjecting ourselves to hours and hours of Auto-Tune media would leave us either jaded or even more disdainful of the current state of popular music. However, we were pleasantly surprised that our research had the opposite effect. The more high-quality music and projects we encountered over the course of our research, the more hopeful we became about Auto-Tune’s role in our culture. If there is anything the history of music and art has taught us, it is that artists, not their tools, determine the greatness of their work. We now have a positive outlook on the adoption of Auto-Tune into the musical landscape.

Please listen to the podcast for examples and further insight into our research on this topic.

Listen online